Low Resolutions Postmortem

Low Resolutions was my first solo attempt at making a larger scale game. The intent was for Low Resolutions to be a 10-15 minute experience that explored difficult choices and the impact those choices have on the people making them. My aim with the project was to create as much as possible myself to push myself to learn a range of new areas (audio and animation).

Risk management

Low Resolutions was going to be the largest game that I’d attempted to create largely by myself. That created a lot of potential risks both in terms of the game design and the game mechanics. My normal approach would be to focus on the highest risk items first (in this case the level design and narrative). However, to test the level design I also needed to have some of the high risk gameplay elements in place first (unlockable doors and a companion character).

Because of this dependency I decided to create the core elements first in a test level starting with the unlockable door system and then the companion character. Let’s take a look at each of those elements in more detail.

Unlockable doors and the ant line system

The doors, from a mechanical point of view, are quite simple. They are a few primitives that are animated using Mecanim and a controlling script that tracks whether the door is locked or unlocked. If the door is unlocked then the door registers the presence of the player or the companion using a trigger volume on either side. If the door is locked then the controlling script ignores any of the trigger volume events. That side was straightforward, but how would the unlocking happen? And how would the unlocking be tied to the choice the player makes?
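As a rough illustration of the door rules described above, here is a minimal engine-agnostic sketch in Python. It is not the game's Unity script; the `Door` class and its method names are hypothetical, and the Mecanim animation is reduced to a single `is_open` property.

```python
class Door:
    """Hypothetical door: tracks lock state and who is in its trigger volumes."""

    def __init__(self, locked=True):
        self.locked = locked
        self.occupants = set()  # characters currently inside a trigger volume

    @property
    def is_open(self):
        # Open only while unlocked and someone is standing near the door.
        return not self.locked and bool(self.occupants)

    def on_trigger_enter(self, who):
        if self.locked:
            return  # locked doors ignore trigger volume events
        self.occupants.add(who)

    def on_trigger_exit(self, who):
        if self.locked:
            return
        self.occupants.discard(who)

    def unlock(self):
        self.locked = False
```

One consequence of ignoring events outright in this sketch is that a character already standing in the trigger volume when the door unlocks is not registered until they leave and re-enter.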

The player needed to be able to make these important choices in the world. And I didn’t want those choices to be a simple matter of pick an option and the choice is immediately made and the door unlocks. A key theme of the game was that these choices were difficult and the player should be thinking about them. I wanted to create the space for that thinking to occur. This meant that the player had to take some action and for a period of time keep performing that action for the door to unlock.

My solution here was to use familiar touchstones and to use a pressure plate (aka. trigger pad) that the player would step on and a series of connecting elements (ant lines) that linked to the door. The ant lines would allow the player to see a visual indicator of their progress. Conceptually the ant lines are simple and follow some basic rules:

- When the player steps on a trigger pad it activates the ant line element next to it

- The ant line element waits a time and then activates the next element

- Each element activates the next until it reaches the door at which point it unlocks it

- If the player steps off of a trigger pad then the ant line can reverse if the door is not unlocked

- The last element that is active turns off after a short delay

- When that element turns off it tells the previous element to turn off, also after a short delay

- That process continues back towards the trigger pad until all of the ant lines are deactivated

Conceptually the process was quite simple. However, my implementation had a lot of issues and it does not work in a way that I am happy with for a couple of reasons:

- The time to activate/deactivate is controlled by the animation. When the animation hits a set point it tells the ant line that it needs to activate/deactivate the next/previous element as appropriate.

  - The end result is that it gets messy when trying to deactivate an element that is mid activation or activate an element that is mid deactivation.

- The elements have no overall controller. Each one manages its own state.

  - This means that the elements need to talk to each other a lot to work around the first issue without breaking.

- Stepping on a trigger pad as an ant line is deactivating does not reactivate it.

  - This was implemented to avoid bugs due to switching state mid activation/deactivation.

Why did it end up like this? A lack of planning due to overconfidence. I believed I was very familiar with implementing such a system and could do so without any planning. I was wrong. I made something that worked but it’s fragile and has required a lot of bug fixing that took time away from other areas.

Here’s what I’m going to do differently in future:

- Follow the advice I give my students and plan things when I haven’t implemented them multiple times previously.

- Pick one ant line element (the trigger pad) to be the authoritative element that can manage the overall state of the ant line.

- Redesign the activation/deactivation logic to be interruptible at any point in time.
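One possible shape for that redesign, sketched in Python rather than as a Unity script: a single controller owns one continuous `progress` value, and the elements are simply drawn from it. Everything here (the `AntLine` class, `seconds_per_element`, the method names) is a hypothetical illustration, not the game's code.

```python
class AntLine:
    """Hypothetical single controller for a trigger pad, its ant line and a door."""

    def __init__(self, element_count, seconds_per_element=0.5):
        self.element_count = element_count
        self.seconds_per_element = seconds_per_element
        self.progress = 0.0  # 0.0 = all off, element_count = all on
        self.pad_pressed = False
        self.door_unlocked = False

    def set_pad_pressed(self, pressed):
        # Safe to call at any time; the direction is re-read on every update.
        self.pad_pressed = pressed

    def update(self, dt):
        if self.door_unlocked:
            return  # once the door is unlocked the line no longer reverses
        step = dt / self.seconds_per_element
        if self.pad_pressed:
            self.progress = min(self.element_count, self.progress + step)
        else:
            self.progress = max(0.0, self.progress - step)
        if self.progress >= self.element_count:
            self.door_unlocked = True

    def active_elements(self):
        # Number of fully lit elements, counted from the trigger pad.
        return int(self.progress)
```

Because direction is derived from `pad_pressed` each frame, stepping back onto the pad while the line is retreating simply makes `progress` climb again from wherever it was, so there is no "mid activation" state to get stuck in.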

Companion character

The companion character was another area that I dived into without too much planning. However, in this case it wasn’t as dumb as it sounds. Companion AI is something that I have implemented a lot of times and the requirements here were very simple. The AI needed to:

- Follow the player and keep out of their way

- Be able to move to a location and either hold there and watch the player or play an animation

- Patrol between points

All three were areas that I had implemented many times previously so I followed the same approaches I’ve used before:

- The character was controlled using a very simple finite state machine (watch player, patrol, idle, perform animation)

- Locations that the companion could move to were able to be given particular markup that would indicate an animation to play or an action to take
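A minimal sketch of that kind of state machine, in Python for brevity. The state names follow the list above; the `Waypoint` markup fields and the `Companion` callbacks are hypothetical stand-ins for whatever the real Unity script uses.

```python
from enum import Enum, auto


class State(Enum):
    IDLE = auto()
    PATROL = auto()
    WATCH_PLAYER = auto()
    PERFORM_ANIMATION = auto()


class Waypoint:
    """A location with optional markup telling the companion what to do there."""

    def __init__(self, name, animation=None, watch_player=False):
        self.name = name
        self.animation = animation
        self.watch_player = watch_player


class Companion:
    def __init__(self, route):
        self.route = route
        self.index = 0
        self.current_animation = None
        self.state = State.PATROL if route else State.IDLE

    def on_arrived(self):
        """Called on reaching route[index]; the markup picks the next state."""
        point = self.route[self.index]
        if point.animation is not None:
            self.state = State.PERFORM_ANIMATION
            self.current_animation = point.animation
        elif point.watch_player:
            self.state = State.WATCH_PLAYER
        else:
            self.advance()

    def on_animation_finished(self):
        self.current_animation = None
        self.advance()

    def advance(self):
        # Head for the next point on the route, wrapping around at the end.
        self.index = (self.index + 1) % len(self.route)
        self.state = State.PATROL
```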

These core behaviours worked very well and the implementation of them was straightforward. The trickiest part was the interaction between the AI and doors. Because the doors could open/close the companion needed to know that a path existed through them. However, the doors also could be locked so the companion needed to ignore trying to move through locked doors. The solution here, which I’ve used previously, was:

- The doors have an obstacle that cuts out the navmesh when closed. That obstacle is turned off when the door is open.

- The doors have an ‘off mesh link’ that bridges the isolated sections of navmesh when the door is closed.

- If the door is locked then the off mesh link is disabled.

- When the companion reaches the off mesh link the companion AI switches off Unity’s navmesh agent movement and turns on its own logic. This allowed me to precisely control where the AI moved and at what speed. I found that the default navmesh agent movement resulted in jittery movement or the AI becoming stuck.
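The door rules above reduce to a small truth table. The sketch below expresses it in Python; `DoorNavState` and `door_nav_state` are hypothetical names, and in the actual project these flags would be set on the door's Unity navigation components instead.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DoorNavState:
    obstacle_enabled: bool  # cuts the door's footprint out of the navmesh
    link_enabled: bool      # off mesh link bridging the two sides of the door


def door_nav_state(is_open, is_locked):
    """Derive the navigation flags for a door from its current state."""
    if is_open and is_locked:
        raise ValueError("a locked door cannot be open")
    return DoorNavState(
        obstacle_enabled=not is_open,  # closed doors split the navmesh
        link_enabled=not is_locked,    # locked doors offer no route through
    )
```

A closed but unlocked door therefore has both the obstacle and the link enabled: the mesh is split, but the companion can still plan a path through the doorway.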

Overall I’m very happy with how the companion behaves. The finite state machine worked well and the AI was able to patrol and play animations reliably. The door solution, while not optimal, also worked well enough to allow the companion AI to move reliably through the world. However, there are some things that I would do differently in future:

- The finite state machine for the behaviours was very tightly integrated into the AI code (literally the same script). While that didn’t present an issue for this project due to the limited capabilities required, it does make maintenance and extension of that behaviour more problematic.

  - A better solution would be to split the behaviours out and allow them to be customised by attaching different components. That will make it easier to extend and customise the AI behaviours going forward.

- The custom steering control for doors worked well but the transition between door movement and the navmesh agent movement did at times result in glitches and teleporting.

  - In future I want to shift the AI over to using fully my own code for all steering behaviours (not just doors). This will give me more control over how the AI moves throughout the world. My preference here would be to port Recast + Detour to Unity. I’ve used the system before and seen how robust and fast it is so it makes a good alternative to implementing my own solution fully.

- The behaviours for the companion are not well exposed to external scripting systems. This is on both sides: level scripting talking to the companion; and the companion talking to the level scripting.

  - I’ll touch on this in more detail in the next section but in the future I want to treat the AI as a first-class citizen in Fungus. That will make it easier to script interactions between the AI and the game world (and vice versa).

Level scripting

Early on in development I set up my own level scripting system. That system was able to do two main things:

- Call functions on another script

- Set variables on another script

The ant lines, doors, trigger pads and the AI were all set up to support this custom level scripting system. However, the system was very basic. It functioned by finding things by name and then using reflection to call functions and set variables. It did work but typos would result in errors and seeing all of the pieces of how the scripting fit together was problematic.
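To show why name-based lookup is so sensitive to typos, here is a small Python sketch of the same idea using `getattr`/`setattr` as the reflection mechanism. The function names, the `registry` dictionary and the `Hologram` stand-in object are all hypothetical; the original system was a Unity script.

```python
class ScriptError(Exception):
    """Raised when a scripted name does not resolve to anything."""


class Hologram:
    """Stand-in scene object used only for this example."""

    def __init__(self):
        self.intensity = 0.0
        self.visible = False

    def show(self):
        self.visible = True


def _find(registry, object_name):
    try:
        return registry[object_name]
    except KeyError:
        raise ScriptError(f"no object named {object_name!r}") from None


def call_function(registry, object_name, function_name, *args):
    """Look up an object by name and call one of its methods by name."""
    function = getattr(_find(registry, object_name), function_name, None)
    if not callable(function):
        raise ScriptError(f"{object_name!r} has no function {function_name!r}")
    return function(*args)


def set_variable(registry, object_name, variable_name, value):
    """Look up an object by name and assign one of its existing attributes."""
    target = _find(registry, object_name)
    if not hasattr(target, variable_name):
        raise ScriptError(f"{object_name!r} has no variable {variable_name!r}")
    setattr(target, variable_name, value)
```

Every name is just a string, so a misspelling such as `"shwo"` instead of `"show"` is only discovered when that piece of scripting actually runs.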

Part way through development I integrated a plugin called Fungus. Fungus is a visual scripting system that provides a lot of functionality, in particular for conversations. I was going to need the ability to display basic conversations and I didn’t want to develop my own system, so it seemed a good option. The more I used Fungus, though, the more I realised it could do everything my own scripting system did, do it better, and do a lot more besides.

The end result of this is that in the final game there is very little of my own scripting system left. Whenever I ran into a bug with my own scripting system, my solution was to move that logic over to Fungus. Any new scripting was set up using Fungus by default. The choice to do that rather than persist with a system that mostly worked turned out to be a good one. Fungus was the far better choice for many reasons:

- With Fungus I could build the level scripting visually. This made it substantially easier to visualise what was happening and to keep track of all of the scripting.

- Fungus allowed for more complex logic (variables and conditionals) which meant more game logic could be in the overall level scripting rather than in code making it easier to maintain.

- Fungus was easy to extend to support any custom systems that I added (such as the hologram intensity being controllable via Fungus).

Going forward I’ll be fully removing my initial scripting system in favour of Fungus and setting up tight integrations with Fungus for areas such as the companion AI. So far I have only made limited use of the event system in Fungus and this seems like a good area to make more use of as well. That would allow me to integrate the code in a more user friendly way with Fungus rather than having the scripts explicitly running a block (which is also more prone to typo related errors).

Designing hard choices

Deciding on the difficult choices that the player would make was not easy. My main goals with the choices were:

- Both possible options were reasonably balanced. That meant both choices needed to have positive and negative aspects and a roughly similar level of each. If one choice meant no deaths and one meant hundreds that wasn’t a fair balance.

- Where possible I wanted to avoid choices that would bring in too much of people’s own inherent biases. I wanted people to struggle with the choices rather than quickly pick an option because it lined up with their views.

- The choices needed to have ‘weight’. I wanted the choices to require serious thought and that meant the choices had to have substantial impact on people.

This wasn’t an easy process. Not only did I need to work out what choices the player would make I also needed to convey those choices in a suitable way. That meant writing dialogue which is not an area that I have much experience with. My approach to working out the choices was:

- Identify a potential scenario (eg. anti LGBT legislation)

- For that potential scenario I would plan out what the two options would be and what the key characteristics of them would be (eg. allow legislation to pass vs outing someone)

- I would then iterate and refine on the scenario by running it by other people. What I was looking for when getting input from others was:

  - Their decision took time. If they were able to make the choice immediately then it wasn’t causing them to think deeply about it which meant the framing of the choice (or the choice itself) was flawed.

  - Regardless of their choice they could articulate specific reasons for making it. I didn’t want people choosing options arbitrarily. I was specifically aiming for people to make very deliberate choices and to have solid reasons. In particular I was aiming for the choices causing people to try to justify their choice as being ‘right’.

  - They had a clear understanding of what the impact was of either option. If the choices weren’t clear in terms of what they were asking then that was obviously a major problem.

- I would then tweak the framing of the scenario based on that feedback.

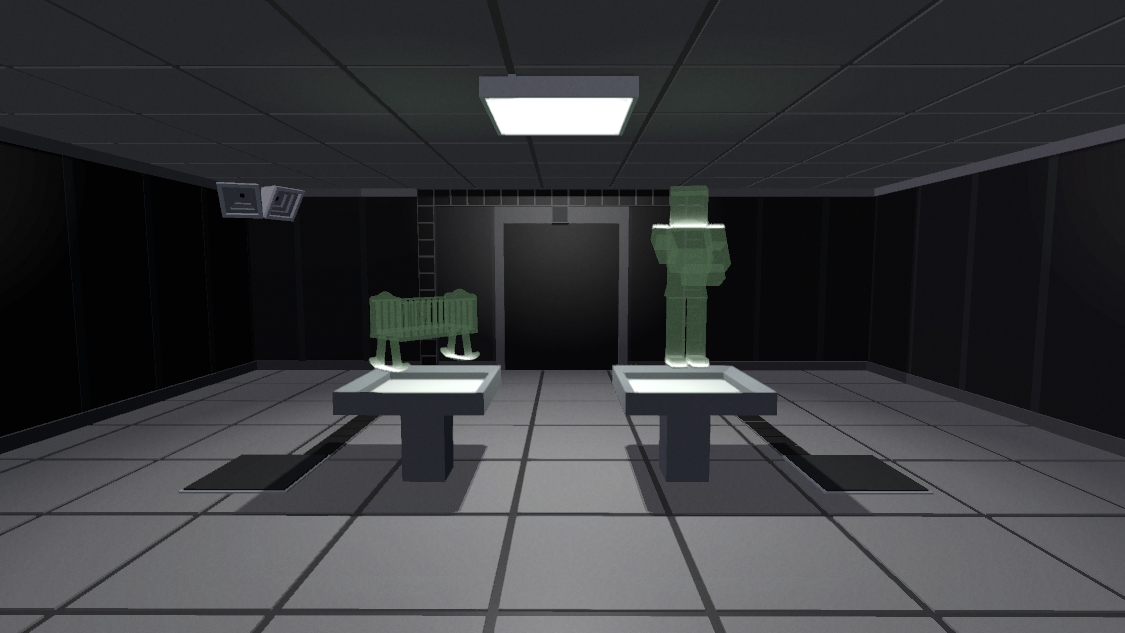

- Once I was happy with the framing of the scenario I put together the visuals for the holograms. Concept art is again not my strong suit so I enlisted help from someone who could come up with concept art which worked well.

- The final stage was writing the dialogue for both the speaker and the companion. For this I relied heavily on the information that I had refined through talking over the scenario with other people.

- Once the dialogue was written and the models were in I would then playtest the room looking for any bugs or inconsistencies in how things flowed. Once that checked out I would get input from others on that specific room.

Overall the process worked well. It wasn’t a fast process but in part I put that down to my inexperience at narrative design. I’d like to say that by the end it did get easier but I don’t feel that it did. However, I do feel I have a better understanding of the scale of work involved and how to approach it in a way that works.

This is an area that I want to get better at. As a result my future plans involve doing a lot more of this. The more I engage with the narrative design area, the more feedback I get and the more I try to learn about it the better I will become. Essentially: research, practice and feedback.

Playtesting

Throughout development I had done regular playtesting of isolated sections, both testing it myself and having others test it. Once all of the pieces were integrated I set up a much larger-scale playtest involving several people. For that playtest there were several things I was looking for:

- Did people struggle with the choices?

- Did making the choices have any emotional impact on the player?

- How did people feel about the companion and the voice on the speaker?

I asked directly about emotional impact and how people felt about the characters. For the struggle with choices, my approach was to ask general questions about the decision process. I also asked people whether they were left or right handed. The reason was to check whether people who made a choice because they had to, rather than for a specific reason, were biased towards their dominant hand.

How did the playtesting go though? Great. I got a lot of feedback telling me that people felt nothing for the companion and the choices didn’t weigh on them much. Why is that great though when I was specifically wanting there to be impact? It was great because the feedback was clear and very detailed. All of the playtesters provided a lot of very useful feedback and suggestions that meant I had the foundations needed to make the game have the impact I wanted.

I still believe I could have approached the playtesting and feedback better. It didn’t get to me that people weren’t being impacted by the game. The game was failing to do its job right and as its creator my job was to trust that feedback and fix the game so it could impact people. The main improvement with playtesting though would have been to start it earlier. Rather than waiting to the end I could (and should) have playtested months earlier. That would have saved me a bunch of rework and given me more time to further refine and iterate on the game.

Polish and refinement

The feedback from the playtesting very clearly revealed that for all of the playtesters the game was failing to create the response in players it was intended to. No one felt any connection to the companion character or any difficulty with the decisions. Both were a substantial failing on the part of the game and needed to be fixed. The difficulty was in how to fix them. I wanted to keep my fixes very focused and if possible wanted to avoid a substantial rewrite of large sections. My solutions here were:

- Give the companion character a name. It should have occurred to me sooner but not giving the companion a name inherently dehumanised them so it wasn’t surprising it was hard for people to form any connection with them.

- Add text prompts over the holograms. At times people weren’t clear what choice they were making. The solution here was to add a short text prompt that would appear over each hologram to indicate what choice it corresponded to.

- Expand the initial dialogue for the companion (now called Alex). During the initial meeting in the prison cell Alex would now talk at much greater length to the player to build up more of a connection.

- Add set piece events for Alex in every room. In every room Alex would perform a specific sequence (typically an animation and some dialogue). Initially, these were used to prompt the player about the text prompts. Later they became a way for Alex to express more of their back story. Alex shared more about themselves and also voiced their dislike for the voice on the speakers and what was being done. Alex could articulate the frustration and anger the player was feeling but could not express.

- Make all dialogue auto close after a short time. This removed the need for the player to click which meant they could just read. That helped the dialogue flow better.

- Add reverb zones to all of the rooms. The difference was subtle but it helped the soundscape feel more consistent.

- Colour the different ant lines in the final room and hide those in the other room. Initially the ant lines in the final room were all jumbled up. It simply never occurred to me to hide the ones which weren’t in your room. Hiding the ones that weren’t present meant it visually looked better/cleaner. Colouring the two options (free yourself or Alex) also helped indicate the different choices plus provided an explanation for the different behaviour of those trigger pads.

Overall the fixes worked well. The next round of playtesting and feedback produced substantially better results. People were being impacted by their choices and were struggling to make them. That was the result I had been aiming for, and the game was finally ready for release.

However, there are some further improvements going forward. At the moment the auto closing of the dialogue is based on a fixed time. It doesn’t relate to how long the text is and does not provide any ability for the player to customise the time. That is a huge oversight as everyone has different reading speeds. By not providing people the ability to change those speeds I’m potentially excluding players who read faster or slower than the fixed time assumes. Going forward that is something that I intend to fix with both a user override and an automatic system based on the number of words.
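A minimal sketch of what that timing calculation might look like, written in Python. The function name, the default of 200 words per minute and the two second minimum are all assumptions chosen for illustration, and `time_multiplier` stands in for the player-facing override.

```python
def dialogue_display_seconds(text, words_per_minute=200.0,
                             time_multiplier=1.0, minimum_seconds=2.0):
    """Estimate how long a line of dialogue should stay on screen.

    words_per_minute and minimum_seconds are illustrative defaults, not
    measured values. time_multiplier is the player's setting: values above
    1.0 keep dialogue up for longer, values below 1.0 close it sooner.
    """
    word_count = len(text.split())
    reading_seconds = word_count * 60.0 / words_per_minute
    # Very short lines still get a minimum time so they do not flash past.
    return max(minimum_seconds, reading_seconds) * time_multiplier
```

With these defaults a ten word line stays up for three seconds, and a player who sets the multiplier to 2.0 gets six.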

Conclusion

Overall I’m exceptionally happy with Low Resolutions. It was the largest solo game project I have attempted and pushed me out of my comfort zone on many different fronts. I learned a lot in the process of developing it and I’ll be able to take that learning through into my next projects.

Low Resolutions is available on itch.io here.